Deployment

After you create and publish a model, deploy it to a repository, where it appears on the Repositories page. The deployed model tells Boomi DataHub how to manage incoming and outgoing entities and how to create golden records based on your model's design.

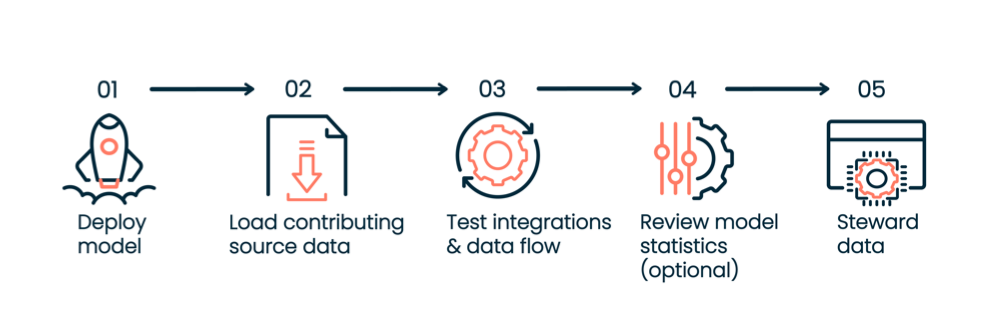

Deployment process

You can follow this process when deploying a model. You can initiate post-deployment actions on the Repositories page or through the DataHub APIs.

- Deploy a published model to the repository.

- Enable initial load and load data for each contributing source. We recommend starting with the source that has the most trustworthy data. To load data, you must prepare integrations that transfer data from source systems into Boomi DataHub so that DataHub can create golden records. For an integration example, review Building an integration process for initial load. You can load data directly into the repository through deployed integrations or, as an optional step, into staging areas first. A staging area lets you preview contributions from source entities and verify that source configurations meet your data management objectives before you commit the data to the repository for incorporation into golden records.

- Test and deploy integrations to check that source record updates from contributing sources are routed to the repository. Likewise, test and deploy integrations to check that batches of source record update requests, which specify create, update, and delete operations, are routed from Boomi DataHub to the accepting sources. Review the Synchronization articles for integration examples.

- Optional: After data is in the repository, review model statistics to help make model design decisions and identify data quality issues.

- After deploying your integrations for data synchronization, schedule the processes to run at regular intervals. Steward data as it flows to and from DataHub through integrations and verify data remains synchronized.
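The steps above form an ordered checklist in which one step is optional. The following Python sketch tracks that order locally. It is a hypothetical illustration: the step labels (`deploy_model`, `initial_load`, and so on) and the `DeploymentChecklist` class are made-up names and are not DataHub identifiers or API calls.

```python
from dataclasses import dataclass, field

# Hypothetical step labels mirroring the process above; (name, required).
STEPS = [
    ("deploy_model", True),
    ("initial_load", True),
    ("test_integrations", True),
    ("review_statistics", False),  # optional step
    ("schedule_processes", True),
]


@dataclass
class DeploymentChecklist:
    done: set = field(default_factory=set)

    def complete(self, step: str) -> None:
        """Mark a step done, enforcing that earlier required steps are finished."""
        names = [name for name, _ in STEPS]
        if step not in names:
            raise ValueError(f"unknown step: {step}")
        for name, required in STEPS[: names.index(step)]:
            if required and name not in self.done:
                raise RuntimeError(f"complete {name!r} before {step!r}")
        self.done.add(step)

    def next_required(self):
        """Return the first required step not yet done, or None when finished."""
        for name, required in STEPS:
            if required and name not in self.done:
                return name
        return None
```

Optional steps such as reviewing statistics never block later steps, which matches the "Optional:" label in the list.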

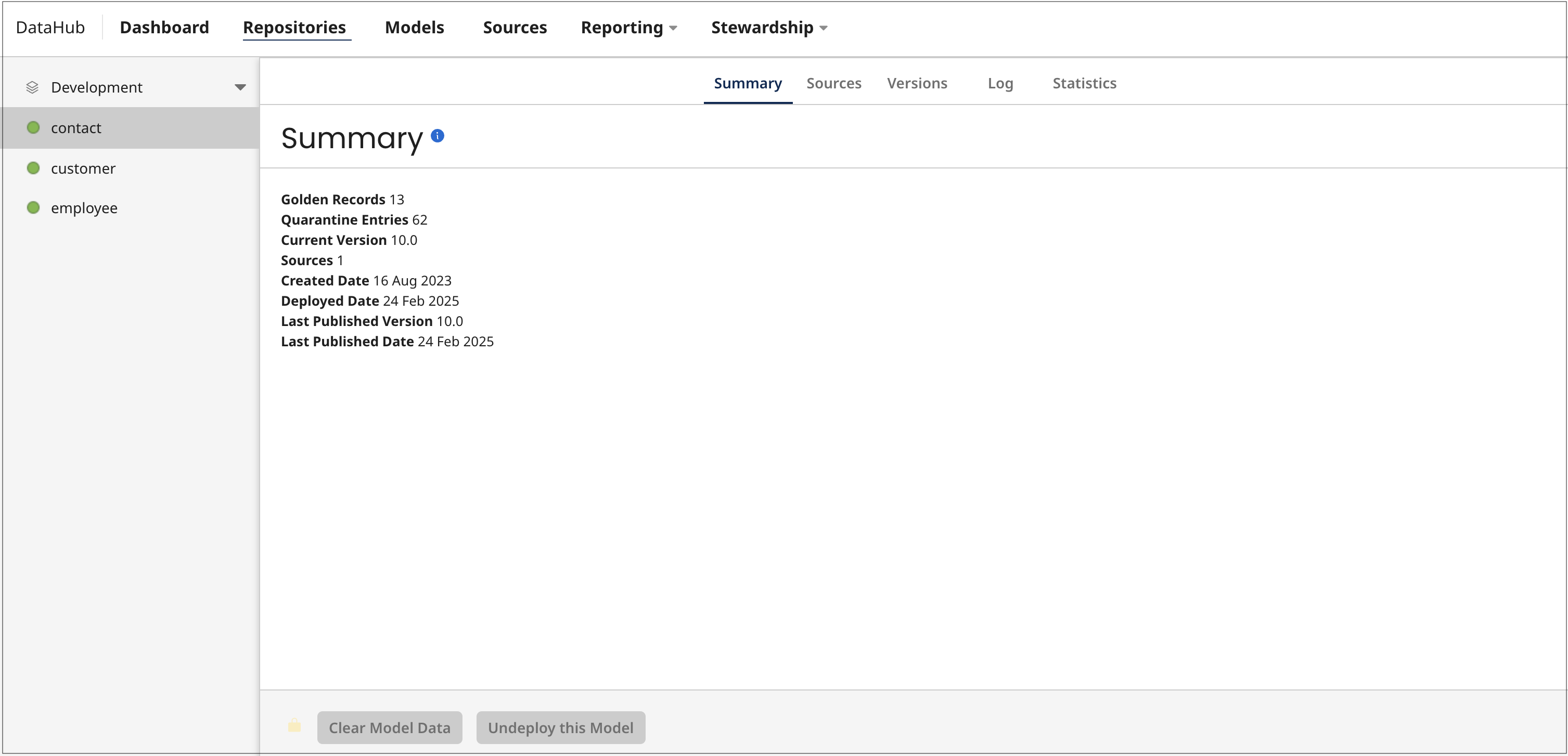

Deployment summary

The Summary tab of a domain (deployed model) shows the following statistics:

- Golden record count

- Quarantine entry count (if applicable)

- Current deployed version number

- Number of sources attached to the model

- Number of pending batches of incoming source entities not yet processed

- Number of outgoing source record update requests for which the repository has not received acknowledgement from integration processes
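To make the Summary tab counters concrete, here is a minimal Python sketch that holds them in a record and flags the ones suggesting work is still in flight. The `DomainSummary` class and its field names are hypothetical. They are not part of any DataHub API. A quarantine count of 0 stands in for "not applicable".

```python
from dataclasses import dataclass


@dataclass
class DomainSummary:
    """Hypothetical container for the Summary tab statistics."""
    golden_records: int
    quarantine_entries: int
    deployed_version: int
    attached_sources: int
    pending_incoming_batches: int
    unacknowledged_outgoing_updates: int

    def attention_items(self) -> list[str]:
        # Collect counters that indicate pending work or entries to review.
        items = []
        if self.quarantine_entries:
            items.append(f"{self.quarantine_entries} quarantine entries to resolve")
        if self.pending_incoming_batches:
            items.append(f"{self.pending_incoming_batches} incoming batches pending")
        if self.unacknowledged_outgoing_updates:
            items.append(
                f"{self.unacknowledged_outgoing_updates} outgoing updates awaiting acknowledgement"
            )
        return items
```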

Source configurations

If you add source configurations to the model before deploying, those configurations transfer to the deployed model (domain). You can also configure and edit sources later in the deployed model.

You can sync changes between your deployed model and the original model design. You can either revert the deployed model to match the original settings or import the deployed model’s settings into the original model. Read Source attachment and configuration for more details.
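The two sync directions can be illustrated with a small Python sketch that treats each side's source settings as a dictionary keyed by source ID. This is only a conceptual model. The functions `differing_sources` and `sync` and the setting keys in the example are hypothetical, and DataHub performs the real sync through its own interface.

```python
import copy


def differing_sources(model: dict, deployed: dict) -> set:
    """Source IDs whose configuration differs between model and deployed model."""
    return {s for s in model.keys() | deployed.keys() if model.get(s) != deployed.get(s)}


def sync(model: dict, deployed: dict, direction: str):
    """Return (model, deployed) after syncing in the chosen direction.

    'revert' resets the deployed model to the original model design.
    'import' copies the deployed model's settings into the original model.
    """
    if direction == "revert":
        return copy.deepcopy(model), copy.deepcopy(model)
    if direction == "import":
        return copy.deepcopy(deployed), copy.deepcopy(deployed)
    raise ValueError("direction must be 'revert' or 'import'")
```

Usage: with `model = {"CRM": {"creates_golden_records": True}}` and a deployed model that also attaches a hypothetical `"ERP"` source, `differing_sources` reports both IDs. Either sync direction makes the two sides identical.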

Initial loading of source data

You must enable Initial Load mode for each contributing source one source at a time. Only one source can send data in this mode at any given time. Activate Initial Load mode on the Sources tab in the user interface or through the DataHub Platform API. After enabling it, run the source's integration process to load all of that source's records into the repository. If one source is the system of record for all fields in the domain model, load that source's data first. Read Loading data from a source and Source attachment and configuration to learn more.
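The one-source-at-a-time rule can be modeled as a simple guard. The following Python sketch is hypothetical. `InitialLoadGate`, `enable`, and `finish` are illustrative names and do not correspond to Platform API operations. The sketch shows the constraint only: a second source cannot enter Initial Load mode until the first one finishes.

```python
class InitialLoadGate:
    """Hypothetical guard: at most one source may be in Initial Load mode."""

    def __init__(self):
        self.active = None  # source ID currently in Initial Load mode
        self.loaded = []    # sources that finished initial load, in order

    def enable(self, source_id: str) -> None:
        if self.active is not None and self.active != source_id:
            raise RuntimeError(f"{self.active} is already in Initial Load mode")
        self.active = source_id

    def finish(self, source_id: str) -> None:
        if self.active != source_id:
            raise RuntimeError(f"{source_id} is not in Initial Load mode")
        self.loaded.append(source_id)
        self.active = None
```

Usage: enable and finish the system of record (for example a hypothetical `"SAP"` source) first, then repeat for each remaining source.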

Testing match rules

You can test and preview how match rules apply to a batch of incoming source entities, including which entities would be quarantined as potential duplicates, by using the Match Entities API endpoint or the Boomi DataHub connector's Match Entities operation. Both return match results for each entity in the batch, along with fuzzy matching metadata such as match strength and threshold, which helps you decide whether to adjust the model's fuzzy match settings or match rules. This optional testing is especially useful when a deployed model already has golden records and you want to confirm that the match rules work effectively before onboarding a new source.
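The relationship between match strength and threshold can be shown with a small Python sketch. To keep it self-contained, it uses Python's `difflib.SequenceMatcher.ratio()` as a stand-in similarity score. DataHub's own fuzzy matching algorithms and scoring differ. The function names and the 0.85 default threshold are assumptions made for illustration.

```python
from difflib import SequenceMatcher


def match_strength(a: str, b: str) -> float:
    """Similarity in [0, 1]; a stand-in for DataHub's fuzzy match scoring."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def preview_matches(incoming, golden, threshold=0.85):
    """For each incoming value, report the best golden-record candidate,
    its match strength, and whether that strength meets the threshold."""
    results = []
    for value in incoming:
        best = max(golden, key=lambda g: match_strength(value, g))
        strength = match_strength(value, best)
        results.append({
            "entity": value,
            "candidate": best,
            "strength": round(strength, 2),
            "matched": strength >= threshold,
        })
    return results
```

Usage: against golden values `["Acme Corporation", "Globex Inc"]`, the entity `"Acme Corp"` scores 0.72. That score falls below a 0.85 threshold but passes at 0.7, which is the kind of near miss the returned strength and threshold metadata help you spot.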

Staging entities for testing

You can test and preview how source entities affect golden record data before committing them to the repository. Create a staging area for each contributing source you want to preview. You can manage staged entities through the Repository APIs, the Platform API staging endpoints, or the user interface.
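The preview-then-commit idea behind staging areas can be sketched in Python. This is a hypothetical, local model: the `StagingArea` class is illustrative. For simplicity, golden records are keyed directly by source entity ID, which skips matching. Real staging uses DataHub's match and merge logic.

```python
class StagingArea:
    """Hypothetical per-source staging area: preview before committing."""

    def __init__(self, source_id: str):
        self.source_id = source_id
        self.entities = []

    def stage(self, entity: dict) -> None:
        self.entities.append(entity)

    def preview(self, golden: dict) -> dict:
        """Classify staged entities as creates or updates without changing golden."""
        creates = [e["id"] for e in self.entities if e["id"] not in golden]
        updates = [e["id"] for e in self.entities
                   if e["id"] in golden and golden[e["id"]] != e]
        return {"create": creates, "update": updates}

    def commit(self, golden: dict) -> dict:
        """Return a new golden-record map with staged entities applied, then clear the stage."""
        merged = dict(golden)
        for e in self.entities:
            merged[e["id"]] = e
        self.entities = []
        return merged
```

The key design point is that `preview` is read-only. Nothing reaches the golden records until you explicitly commit.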

Model version management

After you change the model design, you can deploy new or older versions of the model to the repository. Model versions are helpful when you modify match rules after testing and want to deploy those changes to the repository. Read Deploying a newer or older version of a model to learn more.
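A minimal Python sketch of the version workflow follows. You publish a new version, for example one with modified match rules, deploy it, and roll back to an older published version if needed. The `ModelVersions` class is hypothetical and only models the rule that a version must be published before it can be deployed.

```python
class ModelVersions:
    """Hypothetical record of published model versions and the deployed one."""

    def __init__(self):
        self.published = []  # version numbers in publish order
        self.deployed = None

    def publish(self) -> int:
        version = len(self.published) + 1
        self.published.append(version)
        return version

    def deploy(self, version: int) -> None:
        # Newer or older versions may be deployed, but only published ones.
        if version not in self.published:
            raise ValueError(f"version {version} has not been published")
        self.deployed = version
```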

Source ranking

When you have more than one contributing source, you can indicate which source has the most trustworthy data. You can apply source ranking to each field in a deployed model so that DataHub knows which source entity can override a less trustworthy source entity. Read Configure source rankings to learn more.
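Per-field source ranking can be illustrated with a short Python sketch. For each field, the value comes from the highest-ranked source that supplied one. The function names and data shapes are hypothetical. The sketch also assumes that a source with no value for a field is skipped and that unranked sources are tried last. DataHub's actual handling of empty values and unranked sources may differ.

```python
def resolve_field(field, contributions, rankings):
    """Pick a field value from the most trustworthy source that supplied one.

    contributions: source ID -> {field: value}
    rankings: field -> list of source IDs, most trustworthy first
    """
    ranked = rankings.get(field, [])
    # Sources without a ranking for this field are tried last, in insertion order.
    order = list(ranked) + [s for s in contributions if s not in ranked]
    for source in order:
        value = contributions.get(source, {}).get(field)
        if value is not None:
            return value
    return None


def resolve_record(contributions, rankings):
    """Build one golden-record view by resolving every contributed field."""
    fields = {f for values in contributions.values() for f in values}
    return {f: resolve_field(f, contributions, rankings) for f in fields}
```

Usage: if CRM outranks ERP for both `email` and `phone` but CRM sent no phone number, the resolved record takes CRM's email and ERP's phone.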

Setting a default source

You can set a default source when a model has a reference field (a reference model). A default source is helpful when the primary model has no reference value/source entity ID pairing to use because the referenced source is not attached. When you choose a default source in the reference model, the primary model resolves the reference more simply by automatically using the source entity ID linked to that default source.

Setting a default source ensures that golden records consistently use the same source for reference field values. You can set the default source in the model or in the deployed model (Repository > Deployed Model > Sources tab). Read Setting the default source to learn more.
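One reading of the default-source behavior can be sketched in Python. When the contributing source is not attached to the reference model, the lookup falls back to the default source's source entity ID link. `resolve_reference` and its parameters are hypothetical names, and this is an interpretation of the description above rather than DataHub's documented algorithm.

```python
def resolve_reference(source_id, ref_entity_id, attached_sources,
                      reference_links, default_source=None):
    """Return the reference-model golden record ID for a reference field value.

    attached_sources: source IDs attached to the reference model
    reference_links: (source ID, source entity ID) -> golden record ID
    """
    if source_id in attached_sources:
        lookup_source = source_id
    else:
        # Contributing source not attached: fall back to the default, if any.
        lookup_source = default_source
    if lookup_source is None:
        return None  # reference stays unresolved
    return reference_links.get((lookup_source, ref_entity_id))
```

Usage: a hypothetical `"WEB"` source that is not attached to the reference model resolves `"C-1"` only after `"ERP"` is set as the default source.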

Model statistics

You can view golden record field value statistics for a deployed model on the Repositories page. The Statistics tab shows metadata such as the number of rows, columns, reference fields, and resolved references. These statistics help your organization make model design decisions and identify data quality issues. Read Viewing golden record field statistics to learn more.
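The following Python sketch computes statistics similar in spirit to those on the Statistics tab over a list of golden records: row and column counts plus per-field populated, empty, and distinct counts. The function and output shape are hypothetical and do not reproduce the exact metrics DataHub reports. A field with many empty values, for example, can point to a data quality issue or to a field the model may not need.

```python
def field_statistics(records: list[dict]) -> dict:
    """Per-field coverage and distinct-value counts for golden records."""
    fields = sorted({f for r in records for f in r})
    stats = {"rows": len(records), "columns": len(fields), "fields": {}}
    for f in fields:
        values = [r.get(f) for r in records]
        # Treat missing keys, None, and empty strings as empty values.
        populated = [v for v in values if v not in (None, "")]
        stats["fields"][f] = {
            "populated": len(populated),
            "empty": len(values) - len(populated),
            "distinct": len(set(populated)),
        }
    return stats
```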

Audit log

DataHub logs user actions and system responses for each deployed model. You can view logs for a specific model or for multiple models within a repository. Review audit logs in the user interface or by querying the Platform API’s audit log object. Read Audit log to learn more.
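After you retrieve audit log entries, for example by querying the Platform API's audit log object, you may want to narrow them to specific models and a time window. The following Python sketch does that filtering locally. The `AuditEntry` fields and the `filter_audit_log` function are hypothetical and do not mirror the audit log object's actual schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class AuditEntry:
    timestamp: datetime
    model: str
    user: str
    action: str


def filter_audit_log(entries, models=None, since=None):
    """Return entries for the given models (all if None) at or after `since`, newest first."""
    selected = [
        e for e in entries
        if (models is None or e.model in models)
        and (since is None or e.timestamp >= since)
    ]
    return sorted(selected, key=lambda e: e.timestamp, reverse=True)
```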